The Technology and Infrastructure behind Data Pipelines

Brendan Stennett

Co-founder, CTO & big data specialist

Brendan explains some of the considerations that need to be made when building out a data pipeline, including open source versus proprietary software, data storage and ETL tooling.

20 mins 47 secs

Key learning objectives:

Understand the difference between open source and proprietary software

Define the importance of the data pipeline and its individual components

Overview:

Data pipelines require an extensive amount of tooling in order to support efficient data usage. The following covers what it means to actually get data into an environment in a prepared way so data scientists can begin their analyses.

What is the Difference between Open Source and Proprietary offerings?

Open source - The core code base is made publicly available, not only for everyone to see but also for anyone to contribute features, bug fixes, and security audits. This allows many contributors to share the burden of developing complex pieces of software. This flexibility and openness, although great for particular solutions and teams, comes with a cost: companies that work with open source software need sophisticated engineering knowledge in-house to set up these solutions and maintain them throughout their lifecycle. Although open source tools may be cheaper than proprietary solutions, they require heavy operational support to maintain. This creates a classic build vs. buy scenario that every company must weigh carefully for itself.

Proprietary offerings - Although none of the codebase is open for you to see, you can rely on the company supplying the software to keep fixing bugs and developing features for the product you are buying. One of the greatest benefits of proprietary solutions is that you are partnering with a company whose core competency is delivering a product that performs a specific function, with entire teams dedicated to building it out and maintaining it into the future. This often translates to a higher quality product and lets your company focus its resources on growing its own core competency, not on becoming open source technology experts.

What are some Examples of Data Storage, and what are its benefits?

The majority of the open source offerings are in the Hadoop ecosystem, which is a data analytics stack revolving around a distributed file system. Think of the file system on your computer, but designed to be run on many computers, in which very large files can be split among them.
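The idea of splitting one large file across many machines can be illustrated with a toy sketch. This is not how Hadoop itself places data (real systems also replicate blocks and track them with metadata services); the function names, the node names and the round-robin placement are all assumptions made for illustration.

```python
def split_into_blocks(data: bytes, block_size: int):
    """Cut a file's contents into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_blocks(blocks, nodes):
    """Assign blocks to nodes round-robin (an assumed, simplified placement policy)."""
    placement = {node: [] for node in nodes}
    for index, block in enumerate(blocks):
        placement[nodes[index % len(nodes)]].append((index, block))
    return placement


def read_file(placement):
    """Reassemble the file by collecting blocks from every node in index order."""
    indexed = [item for stored in placement.values() for item in stored]
    return b"".join(block for _, block in sorted(indexed))


# Hypothetical file contents and node names.
data = b"0123456789"
blocks = split_into_blocks(data, block_size=4)
placement = place_blocks(blocks, ["node-a", "node-b"])
restored = read_file(placement)
```

No single node holds the whole file, yet a reader that knows where each block lives can still put it back together.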

Most cloud providers also offer managed analytics databases as part of their cloud offering. Google Cloud Platform offers BigQuery, a fully managed offering that boasts infinite scalability, both in terms of size of data and concurrency. Amazon Web Services offers Redshift, a columnar database offering impressive query performance and easy setup. And Microsoft Azure offers SQL Data Warehouse (since rebranded as Azure Synapse Analytics), which is also fully managed and boasts elastic scalability, meaning capacity can grow and shrink as needed.

Ultimately, the benefits of setting up an efficient data warehouse are that you will introduce a standard way to store and retrieve all the data that you own as a company. Having a standard way to do this acts as the basic framework for accomplishing all other tasks that require data to function. It becomes the bedrock for you to build a data-driven business.

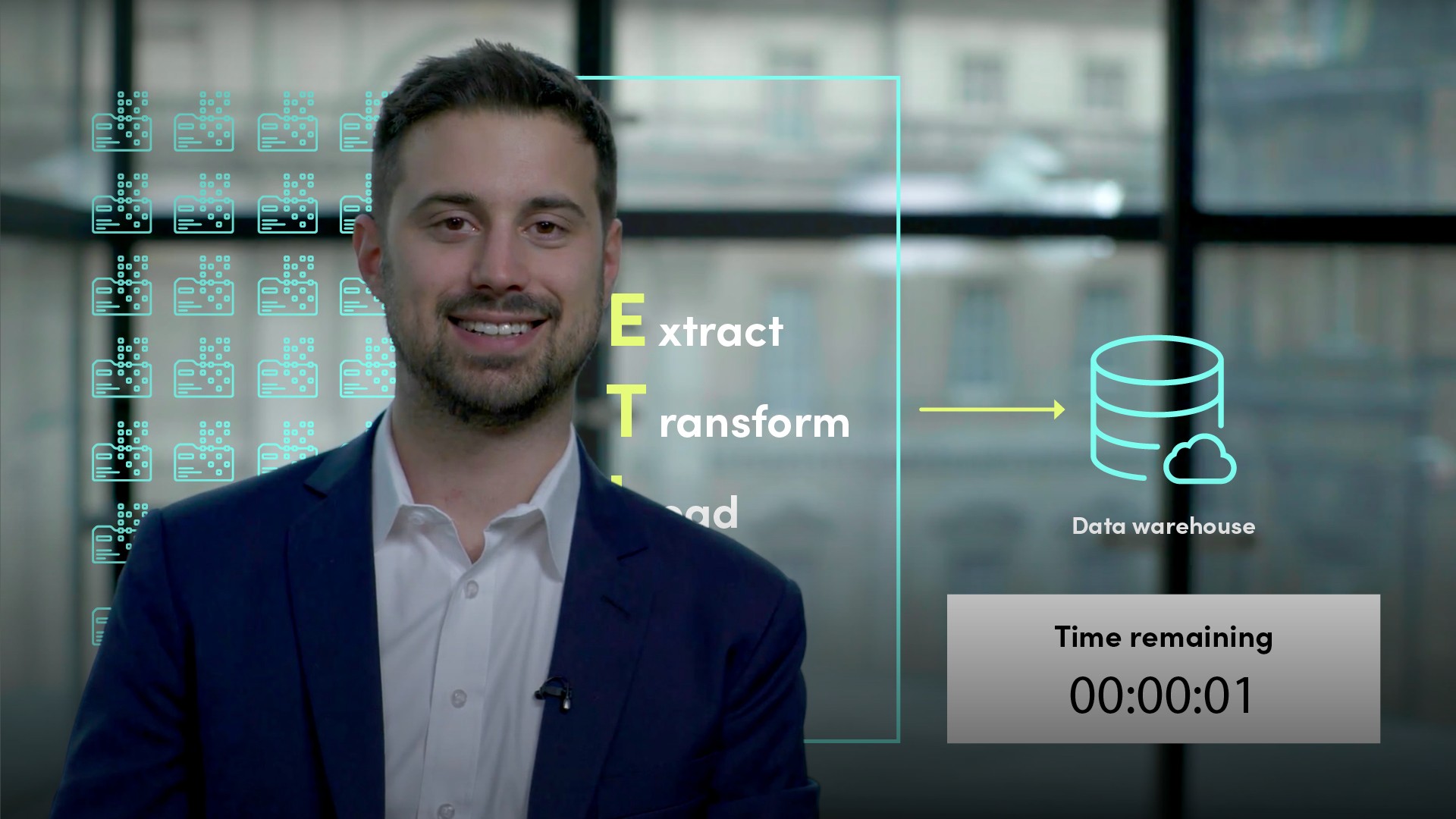

What is ETL Tooling?

Once we have an understanding of data warehousing, we can discuss what it takes to actually get data into a warehouse from all of the various silos and sources where it currently resides. Typically, you will follow a process called ETL, which stands for Extract, Transform, Load. Data is extracted from a source location, transformed into the form in which you ultimately want to consume it, and then loaded into the data warehouse, where it becomes accessible from a central location.
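The three ETL stages can be sketched in a few lines. This is a minimal illustration, not a production tool: the sample CSV data, the `sales` table and the use of an in-memory SQLite database as a stand-in for a data warehouse are all assumptions.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read from a file, API or database.
SOURCE_CSV = """customer,amount,currency
 Acme Ltd ,100.50,usd
Globex,200,USD
"""


def extract(text):
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim whitespace, convert amounts to numbers, upper-case currency codes."""
    return [
        (row["customer"].strip(), float(row["amount"]), row["currency"].strip().upper())
        for row in rows
    ]


def load(records, conn):
    """Load: write the cleaned records into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (customer TEXT, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", records)
    conn.commit()


warehouse = sqlite3.connect(":memory:")  # stand-in for a real data warehouse
load(transform(extract(SOURCE_CSV)), warehouse)
rows = warehouse.execute(
    "SELECT customer, amount, currency FROM sales ORDER BY customer"
).fetchall()
```

Keeping each stage as its own function means a new source only needs a new `extract`, while the transform and load steps stay the same.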

Regardless of the option you decide to pursue, it’s important to remember that ETL tooling is a requirement for the development of an efficient data warehouse. An investment in this stage of the process will make everything that comes after this easier, cheaper, and more efficient for the entire organisation.

What is Data wrangling?

The next part of the pipeline is typically some sort of data wrangling tool. Wrangling means taking your already ingested data and applying various transformations to make it more relevant for analysis, such as mapping source columns to destination columns, splitting one column into many, or merging many columns into one. Think of very powerful Excel formulas, but in a tool meant specifically for this type of workload.
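The three transformations mentioned above (mapping, splitting and merging columns) might look like this in plain Python. The column names and the sample record are hypothetical, and the split assumes the location always contains a comma.

```python
def wrangle(record):
    """Apply column mapping, splitting and merging to one ingested record."""
    # Map source column names to destination column names.
    column_map = {"cust_nm": "customer_name", "ord_dt": "order_date"}
    out = {dest: record[src] for src, dest in column_map.items()}

    # Split one column into many: "City, Country" becomes city and country.
    city, country = (part.strip() for part in record["location"].split(",", 1))
    out["city"] = city
    out["country"] = country

    # Merge many columns into one: first and last name become contact.
    out["contact"] = f"{record['first_name']} {record['last_name']}"
    return out


# Hypothetical ingested record.
raw = {
    "cust_nm": "Acme Ltd",
    "ord_dt": "2020-01-31",
    "location": "Toronto, Canada",
    "first_name": "Jane",
    "last_name": "Doe",
}
clean = wrangle(raw)
```

A dedicated wrangling tool applies rules like these across millions of rows and usually lets analysts define them without writing code.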

What is Data Catalogue Technology?

Nearly everything we have covered in this series up until now has been related to how we take data from its native location, transform it into a standard product, and store it in a central location where it can be accessed. But getting data into a location like this is different to what is needed to then start taking it out and turning pieces of that data into insight.

Data Catalogue technology allows companies to display the data held within their organisation so that those who need it can see what is available and request access. Having a solution to manage this visibility, track requests, and audit access is a major leap forward in the sophistication of a modern data warehousing solution. It will become a requirement as more and more regulation is developed around data security.
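The three responsibilities named above (visibility, request tracking and access auditing) can be modelled with a small in-memory sketch. The `Catalogue` class, its method names and the sample users are assumptions for illustration and do not reflect any particular catalogue product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Catalogue:
    datasets: dict = field(default_factory=dict)   # dataset name -> description
    grants: set = field(default_factory=set)       # approved (user, dataset) pairs
    audit_log: list = field(default_factory=list)  # every request, approval and read attempt

    def _record(self, user, dataset, action):
        self.audit_log.append((datetime.now(timezone.utc), user, dataset, action))

    def register(self, name, description):
        self.datasets[name] = description

    def list_datasets(self):
        """Visibility: anyone can see what exists, even without access."""
        return sorted(self.datasets)

    def request_access(self, user, dataset):
        """Request tracking: log that a user asked for a data set."""
        self._record(user, dataset, "requested")

    def approve(self, user, dataset):
        self.grants.add((user, dataset))
        self._record(user, dataset, "approved")

    def can_read(self, user, dataset):
        """Audit: log every read attempt, whether allowed or denied."""
        allowed = (user, dataset) in self.grants
        self._record(user, dataset, "read_allowed" if allowed else "read_denied")
        return allowed


catalogue = Catalogue()
catalogue.register("sales", "Daily sales by region")
catalogue.register("hr", "Employee records")

denied = catalogue.can_read("alice", "sales")
catalogue.request_access("alice", "sales")
catalogue.approve("alice", "sales")
granted = catalogue.can_read("alice", "sales")
actions = [entry[3] for entry in catalogue.audit_log]
```

The audit log records denied attempts as well as successful ones, which is the kind of trail a regulator would typically expect to see.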

What are the uses of APIs and query languages?

Once a data scientist has found the relevant data, they are going to want to plug into it and start working with it. This is where APIs and query languages come in. They could simply download the data in a format that can be opened in a spreadsheet or other analytical software, but the data will be stale the moment it lands on their computer. It would be better if they connected to the data directly, so their visualisations and models update as the underlying data updates.

APIs and Query Languages allow data scientists and engineers to constantly stream the most up-to-date data into whatever product, model, or solution they have built or manage. This is a far superior way to work when compared to downloading a version of data and spreading it around across multiple teams or applications. It enables that data to be constantly updated, so the solution it is powering is always up to date, everyone working with the data is using the same version, and access is monitored through usage.
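The difference between a downloaded copy and a direct connection can be shown with a short sketch. An in-memory SQLite database stands in for the shared warehouse here, and the `prices` table and ticker are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a shared data warehouse
conn.execute("CREATE TABLE prices (ticker TEXT, price REAL)")
conn.execute("INSERT INTO prices VALUES ('ABC', 10.0)")


def latest_price(ticker):
    """Query the warehouse directly each time the value is needed."""
    row = conn.execute(
        "SELECT price FROM prices WHERE ticker = ?", (ticker,)
    ).fetchone()
    return row[0] if row else None


# A downloaded copy is frozen at the moment of export.
snapshot = dict(conn.execute("SELECT ticker, price FROM prices").fetchall())

# The underlying data changes after the export.
conn.execute("UPDATE prices SET price = 12.5 WHERE ticker = 'ABC'")

stale = snapshot["ABC"]       # still the value at export time
fresh = latest_price("ABC")   # reflects the update
```

Anything built on `latest_price` picks up the change automatically, while every copy of `snapshot` has to be re-exported and redistributed.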

What is Linked data?

One last thing to think about as you’re building out a data pipeline is how to link data once you have it all sitting in the same environment. One of the biggest challenges with bringing together data sourced from many locations is the lack of a common ID field. Without this field, you have to pick another field to use, such as a company name, but this carries a host of problems. One example is differences in the way company names are represented, such as one data set using “Apple” and another using “Apple Inc”. Our reasoning tells us this is the same company, but computers have a much harder time performing this task.
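One common starting point for the name-matching problem is to normalise names before comparing them. The sketch below is a simplified assumption, not a complete entity-resolution method: the suffix list is a short, hypothetical sample, and rules like these can still produce false matches or miss genuine ones.

```python
import re

# Assumed sample of legal suffixes to ignore; a real list would be much longer.
LEGAL_SUFFIXES = {"inc", "incorporated", "corp", "corporation", "ltd", "limited", "plc", "llc"}


def normalise(name):
    """Lower-case the name, strip punctuation and drop trailing legal suffixes."""
    tokens = re.sub(r"[^a-z0-9 ]", " ", name.lower()).split()
    while len(tokens) > 1 and tokens[-1] in LEGAL_SUFFIXES:
        tokens.pop()
    return " ".join(tokens)


def link(left, right):
    """Pair up records from two data sets whose normalised company names match."""
    index = {normalise(record["company"]): record for record in right}
    pairs = []
    for record in left:
        key = normalise(record["company"])
        if key in index:
            pairs.append((record, index[key]))
    return pairs


# Hypothetical records from two sources with no shared ID field.
prices = [{"company": "Apple", "price": 150.0}]
filings = [{"company": "Apple Inc.", "employees": 100000}, {"company": "Globex Ltd", "employees": 50}]
linked = link(prices, filings)
```

Where a shared identifier exists or can be purchased, joining on it is far more reliable than any name-matching rule.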
